Top AI Voice Trends for 2025


AI voice technology in 2025 is transforming how creators produce content and connect with audiences. Key advancements include:

- Natural Language Processing (NLP): AI now understands slang, idioms, and emotional cues, enabling more natural interactions.

- Multilingual & Accent Support: Broader language and accent coverage breaks down barriers to global communication.

- Real-Time Voice Translation: Low-latency, increasingly accurate translation for smoother multilingual conversations.

- AR & VR Integration: AI voices enhance immersive experiences in virtual and augmented reality.

- Improved Voice Synthesis: AI-generated voices sound more human, with emotional depth and context-aware dialogue.

- Emotional Voice Modulation: AI conveys emotions like joy or empathy, making content more engaging.

- Tools like StoryShort AI: Simplify voiceover creation for short-form videos with features like script generation and localization.

These advancements are reshaping content creation, making it faster, more accessible, and globally connected.


1. Improved Natural Language Processing (NLP)

By 2025, advancements in Natural Language Processing (NLP) are making AI voices feel more natural and relatable. Modern AI systems now understand complex language elements like slang, idiomatic expressions, and cultural references, creating smoother and more intuitive interactions [2].

Some key updates include:

- Context Understanding: AI can follow nuanced conversations and tailor its responses to fit the situation.

- Sentiment Analysis: Systems pick up on emotional cues through tone and inflection [2].

- Complex Command Processing: Users can give detailed, natural instructions without needing to simplify their language.
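The sentiment-analysis idea above can be illustrated with a deliberately simple, text-only sketch. Production systems read emotional cues from audio (tone, inflection) with trained models; this swaps in a lexicon-based scorer with basic negation handling. The word list, weights, and function names are illustrative assumptions, not any product's method.

```python
# Toy lexicon: word -> polarity. The words and weights are illustrative assumptions;
# a real system would use a trained model and audio features, not a fixed list.
LEXICON = {
    "love": 2.0, "great": 1.5, "good": 1.0, "fine": 0.5,
    "bad": -1.0, "awful": -2.0, "hate": -2.0, "slow": -0.5,
}
NEGATORS = {"not", "never", "no", "isn't", "don't", "wasn't"}


def sentiment_score(text: str) -> float:
    """Sum word polarities, flipping the sign of any word preceded by a negator."""
    tokens = [t.strip(".,!?;:").lower() for t in text.split()]
    score = 0.0
    for i, tok in enumerate(tokens):
        polarity = LEXICON.get(tok, 0.0)
        if i > 0 and tokens[i - 1] in NEGATORS:
            polarity = -polarity  # "not good" reads as negative
        score += polarity
    return score


def label(score: float) -> str:
    """Map a numeric score to a coarse sentiment label."""
    if score > 0.25:
        return "positive"
    if score < -0.25:
        return "negative"
    return "neutral"


for line in ["I love this voice!", "The pacing is not good.", "It works."]:
    s = sentiment_score(line)
    print(f"{line!r}: {s:+.1f} ({label(s)})")
```

The negation rule is what lets "not good" score as negative; real systems learn this kind of context rather than hard-coding it.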

"Voice AI will also address the problems of accessibility enabling visually impaired people or people who are less tech savvy to interact with an AI seamlessly." [5]

The voice commerce market is benefiting significantly from these advancements, with projections showing growth from USD 41 billion in 2021 to USD 290 billion by 2025 [5]. For creators, this means new opportunities to develop smarter, more relatable voice experiences that connect with a wide range of users.
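To put the projection in perspective, the compound annual growth rate (CAGR) implied by two data points is (end / start)^(1 / years) - 1. Applied to the USD 41 billion (2021) and USD 290 billion (2025) figures cited above, it works out to roughly 63% a year. The helper below is a generic sketch of that arithmetic, not part of the cited source:

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate implied by growing from start to end over `years`."""
    return (end / start) ** (1 / years) - 1


# Figures quoted above: USD 41 billion (2021) -> USD 290 billion (2025).
rate = cagr(41e9, 290e9, 2025 - 2021)
print(f"Implied annual growth: {rate:.1%}")
```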

AI's enhanced Natural Language Understanding now captures subtle elements like humor and sarcasm [5]. This paves the way for more engaging and culturally aware voice content. Creators can use these capabilities to design personalized, context-sensitive voice interactions that align with individual user preferences and behaviors [2].

Additionally, improvements in multilingual processing are helping AI voice technology reach audiences across the globe.

2. Multilingual and Accent Support

AI voice technology in 2025 is pushing past language barriers with advanced multilingual and accent features, delivering highly accurate and natural-sounding results.

These AI systems now understand intricate linguistic details across multiple languages, including idioms, slang, and cultural references. This allows them to provide voice interactions that feel natural and culturally appropriate [2]. They can also adjust to regional accents, making interactions more personal while learning from a wide range of speech patterns over time [2] [3].

What makes this technology stand out is its ability to tailor communication based on cultural nuances. It can modify tone, formality, and even regional expressions to match the cultural context [2]. For content creators, this opens up new ways to connect with audiences across different languages and cultures [4].

Some standout features include:

- Localized voice outputs that sound natural in different languages.

- Accent adaptability to reflect regional speech patterns.

- Cultural sensitivity in tone and expressions.
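Accent adaptability often starts with something as simple as choosing the most specific regional voice available. The sketch below assumes a hypothetical voice catalogue keyed by BCP 47 language tags (the voice names are placeholders, not any vendor's voice IDs) and falls back from a regional tag such as "es-AR" to the base language, similar to the "lookup" scheme described in RFC 4647:

```python
# Hypothetical voice catalogue keyed by BCP 47 tags; names are placeholders.
VOICES = {
    "en": "narrator-en",
    "en-GB": "narrator-en-gb",
    "en-IN": "narrator-en-in",
    "es": "narrator-es",
    "es-MX": "narrator-es-mx",
}
# Language tags are case-insensitive, so index the catalogue in lower case.
_BY_TAG = {tag.lower(): voice for tag, voice in VOICES.items()}


def pick_voice(locale: str, default: str = "narrator-en") -> str:
    """Return the most specific voice for a locale, dropping subtags until one matches."""
    parts = locale.replace("_", "-").lower().split("-")
    while parts:
        voice = _BY_TAG.get("-".join(parts))
        if voice is not None:
            return voice
        parts.pop()  # e.g. "es-ar" -> "es"
    return default


print(pick_voice("en-IN"))  # regional English voice
print(pick_voice("es-AR"))  # no Argentine voice, falls back to generic Spanish
```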

These advancements are especially useful for educational materials, entertainment, and global business communication, where maintaining the subtleties of natural speech is crucial. Work is also ongoing to improve support for less common languages, paving the way for smoother global communication in content creation [2] [4].

3. Real-Time Voice Translation

Real-time voice translation has taken global communication to a new level by enabling instant translations of spoken language. Thanks to advanced NLP algorithms, these translations are now more accurate and context-aware, handling the nuances of live conversations with impressive precision [2].

By 2025, this technology integrates with AR and VR, allowing users to communicate naturally across languages in immersive virtual spaces. Whether in healthcare settings or global classrooms, it bridges language barriers, enabling smooth communication and collaboration across various industries [2].

For content creators, real-time voice translation unlocks exciting opportunities for global reach. Tools like StoryShort AI make it possible to create multilingual voiceovers for videos and podcasts without the need for extra recording sessions. This means creators can connect with audiences worldwide through live streams, webinars, and interactive content, making real-time engagement across languages more accessible than ever [2] [5].

However, challenges remain. Refining the ability to recognize cultural nuances, reducing technical delays, and improving support for regional accents are key areas for improvement in 2025. Despite these hurdles, the technology continues to evolve, helping creators communicate naturally and effectively with global audiences [2].
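A real-time translator is usually built as a chain of stages (speech recognition, machine translation, speech synthesis) that process audio in small chunks, so the listener hears output before the speaker finishes. The sketch below shows that structure with Python generators. The three stage functions are hypothetical stand-ins (a toy word-for-word dictionary replaces the translation model), and the latency figures in the example are assumed, not measured:

```python
from collections.abc import Iterable, Iterator

# Toy dictionary standing in for a machine-translation model (assumption).
TOY_DICTIONARY = {"hello": "hola", "everyone": "a todos", "welcome": "bienvenidos"}


def recognize(audio_chunks: Iterable[str]) -> Iterator[str]:
    """Hypothetical speech-recognition stage: here each 'chunk' is already text."""
    for chunk in audio_chunks:
        yield chunk.lower()


def translate(phrases: Iterable[str]) -> Iterator[str]:
    """Hypothetical translation stage: word-for-word lookup, unknown words pass through."""
    for phrase in phrases:
        yield " ".join(TOY_DICTIONARY.get(word, word) for word in phrase.split())


def synthesize(sentences: Iterable[str]) -> Iterator[bytes]:
    """Hypothetical TTS stage: encodes text as bytes in place of real audio."""
    for sentence in sentences:
        yield sentence.encode("utf-8")


def time_to_first_audio_ms(chunk_ms: float, asr_ms: float, mt_ms: float, tts_ms: float) -> float:
    """A chunk must be fully captured, then pass through every stage, before playback starts."""
    return chunk_ms + asr_ms + mt_ms + tts_ms


# Generators chain lazily, so each chunk flows through all stages before the next is read.
for audio in synthesize(translate(recognize(["Hello everyone", "Welcome"]))):
    print(audio.decode("utf-8"))

# Assumed stage timings, for illustration only.
print(time_to_first_audio_ms(chunk_ms=500, asr_ms=150, mt_ms=100, tts_ms=200), "ms")
```

Shorter chunks reduce the delay before the first audio, but they give the translation stage less context to work with, which is one reason reducing delay and improving context-aware accuracy can pull in opposite directions.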

Beyond personal interactions, this innovation is reshaping how creators connect with international audiences. As advancements in emotional intelligence and cultural sensitivity progress, real-time voice translation is becoming a must-have tool for crafting content that resonates across borders [2] [5].

4. Integration with AR and VR

AI voice technology is reshaping how we interact with AR and VR, making digital environments feel more immersive and interactive by 2025. In augmented reality (AR), AI voices can respond intelligently to a user's surroundings, offering personalized guidance in areas like education and medical training. In virtual reality (VR), this technology enables adaptive dialogue that reacts to user behavior, which is especially useful in training simulations where virtual instructors provide tailored feedback.

For creators, AI voice tools simplify workflows by enabling real-time voice generation, automated localization, and dynamic character interactions. Automated localization ensures AR/VR content feels natural and relevant for users across different languages and cultures. These tools open up new ways to create engaging stories and interactive experiences in gaming, education, and professional training.

AI voice also improves accessibility in AR/VR with features like audio descriptions for visually impaired users and voice-activated navigation. However, developers and content creators need to address some key challenges when implementing AI voice in these environments:

| Consideration | Impact | Solution Approach |
|---|---|---|
| Audio Quality & Latency | Affects immersion | Use high-quality, real-time audio processing |
| Context Awareness | Needed for natural interaction | Incorporate analysis of environment and user behavior |
| Accessibility | Supports inclusive usage | Design with multiple interaction modes |
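The "Audio Quality & Latency" row can be made concrete with one piece of arithmetic: an audio buffer of N frames at sample rate R holds N / R seconds of sound, and it cannot be played until it is full. The sketch below checks a few buffer sizes at 48 kHz against an assumed 20 ms budget. The budget is illustrative, and total latency in a real AR/VR pipeline also includes synthesis, network, and output-device delays:

```python
def buffer_latency_ms(frames: int, sample_rate_hz: int) -> float:
    """Time represented by one audio buffer: frames / sample rate, in milliseconds."""
    return 1000.0 * frames / sample_rate_hz


# Hypothetical per-buffer budget for a VR scene; the 20 ms figure is an assumption.
BUDGET_MS = 20.0
for frames in (256, 512, 1024):
    ms = buffer_latency_ms(frames, 48_000)
    status = "ok" if ms <= BUDGET_MS else "over budget"
    print(f"{frames:>5} frames @ 48 kHz = {ms:.2f} ms ({status})")
```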

The combination of AI voice and AR/VR is transforming how we experience digital content, making interactions more intuitive, engaging, and inclusive. As these technologies evolve, advancements in AI voice synthesis are further boosting the realism and emotional depth of these digital experiences [2] [5].

5. AI Voice Synthesis Improvements

By 2025, AI voice synthesis has reached a new level of realism, handling natural speech patterns, emotional depth, and context-aware dialogue. In many cases, AI-generated voices are now hard to distinguish from human ones, even in complex conversations.

AI-generated voices now replicate tone, rhythm, and subtle emotional cues, creating speech that feels natural and engaging. This allows content creators to produce consistent, human-like voiceovers more efficiently while maintaining the emotional connection that's key to impactful content [4].

With integration into advanced NLP systems, these voices can dynamically adjust their speaking style based on context or audience feedback [4]. Mackenzie Ferguson highlights this shift:

"The evolution of AI assistants is rapidly unfolding with the integration of voice and vision capabilities, which is expected to dramatically transform how humans interact with technology." [6]

From real-time voiceovers to personalized assistants, this technology is changing how creators deliver content. AI systems can now create realistic voices with just a few minutes of recorded speech, making voice cloning faster and more accessible. This benefits applications like content creation, digital assistants, and voice preservation, offering real-time voice generation and context-aware responses [1] [4].

Modern AI voice systems have largely overcome earlier challenges in capturing emotional nuance and complex linguistic patterns. They now replicate variations in tone, pitch, and rhythm effectively, making them well suited to more engaging and personalized digital experiences across platforms [4].
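One common way to control tone, pitch, and rhythm in synthesized speech is SSML (Speech Synthesis Markup Language), a W3C standard accepted in some form by many text-to-speech engines. The sketch below builds an SSML document with per-sentence prosody and pauses. The helper names are hypothetical, nothing here is specific to any product mentioned in this article, and engines differ in which attributes they honor:

```python
from xml.sax.saxutils import escape, quoteattr

SSML_NAMESPACE = "http://www.w3.org/2001/10/synthesis"


def prosody(text: str, rate: str = "medium", pitch: str = "medium") -> str:
    """Wrap text in an SSML <prosody> element.

    rate takes values like "slow" or "90%"; pitch takes values like "high" or "+2st".
    """
    return f"<prosody rate={quoteattr(rate)} pitch={quoteattr(pitch)}>{escape(text)}</prosody>"


def build_ssml(segments: list[str], pause_ms: int = 300, lang: str = "en-US") -> str:
    """Join prosody segments with <break> pauses inside a <speak> root element."""
    body = f'<break time="{pause_ms}ms"/>'.join(segments)
    return (
        f'<speak version="1.1" xmlns="{SSML_NAMESPACE}" xml:lang={quoteattr(lang)}>'
        f"{body}</speak>"
    )


ssml = build_ssml([
    prosody("Our story begins on a quiet night.", rate="slow", pitch="-2st"),
    prosody("Then the phone rang!", rate="fast", pitch="+3st"),
])
print(ssml)
```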

These advancements are reshaping tools like StoryShort AI, paving the way for more emotionally expressive and versatile AI voices in content creation.

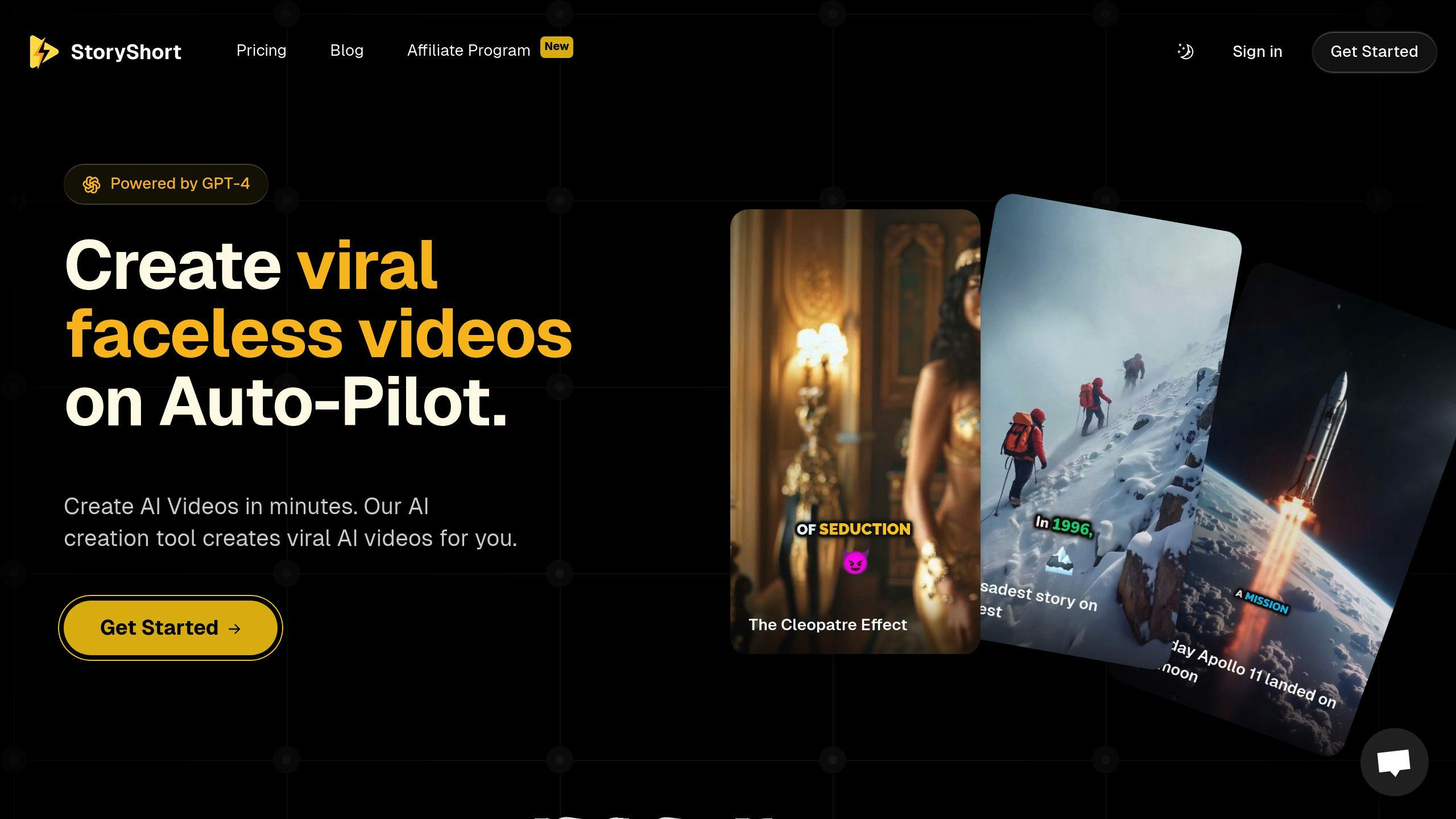

6. StoryShort AI

StoryShort AI is making waves in how creators use AI voice technology for short-form video content on platforms like TikTok and YouTube Shorts. It’s a tool designed to simplify the process of creating professional voiceovers without the need for traditional recording setups.

The platform combines voice synthesis with automatic script generation, helping creators save time and effort. By merging these features, it allows for faster production of high-quality content, especially for those managing large volumes of work.

StoryShort AI offers different subscription plans. The Hobby plan provides basic voiceover tools, while the Influencer plan includes advanced automation and a broader range of voice options. Beyond voiceovers, the platform also integrates features like background music selection, caption generation, and script writing, creating a streamlined, all-in-one solution for content creators.

This approach eliminates many technical hurdles, letting creators focus on their ideas and strategies. StoryShort AI demonstrates how AI voice tools are reshaping content production, making it faster and more scalable for creators everywhere.

7. Emotional Voice Modulation by AI

Emotional voice modulation is changing the game for content creators, making AI-generated speech more relatable and engaging. This technology helps AI voices convey emotions like joy, empathy, or concern, allowing creators to connect with their audiences on a deeper level.

Using advanced natural language processing (NLP) and machine learning, AI analyzes text cues to produce the right emotional tone. This means creators can generate emotion-matched voiceovers without needing extra takes or hiring voice actors. Here’s how it benefits different types of content:

| Content Type | Benefits of Emotional Modulation |
|---|---|
| Storytelling | Adds depth and emotional impact |
| Educational Content | Makes learning more engaging |
| Marketing Materials | Aligns emotional tone with brand messaging |
| Accessibility Tools | Provides expressive voices for non-verbal users |
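The text-cue analysis described above (mapping a script's wording to an emotional tone) can be sketched as a two-step lookup: detect an emotion, then pick prosody settings for it. This swaps in simple keyword matching for the NLP and machine-learning models the article mentions; the cue words and presets are hypothetical, and the rate and pitch values follow SSML conventions:

```python
# Hypothetical cue words and prosody presets; a production system would use a
# trained emotion classifier rather than keyword matching.
EMOTION_CUES = {
    "joy": {"congratulations", "amazing", "celebrate", "wonderful"},
    "empathy": {"sorry", "loss", "understand", "difficult"},
    "concern": {"warning", "careful", "risk", "urgent"},
}
PROSODY_PRESETS = {
    "joy": {"rate": "fast", "pitch": "+2st"},
    "empathy": {"rate": "slow", "pitch": "-1st"},
    "concern": {"rate": "medium", "pitch": "-2st"},
    "neutral": {"rate": "medium", "pitch": "medium"},
}


def detect_emotion(text: str) -> str:
    """Return the emotion whose cue words appear most often, or "neutral" if none do."""
    words = {w.strip(".,!?;:").lower() for w in text.split()}
    counts = {emotion: len(words & cues) for emotion, cues in EMOTION_CUES.items()}
    best = max(counts, key=counts.get)
    return best if counts[best] > 0 else "neutral"


def prosody_for(text: str) -> dict[str, str]:
    """Look up prosody settings for the emotion detected in the text."""
    return PROSODY_PRESETS[detect_emotion(text)]


for line in ["Congratulations, this is amazing!", "I'm so sorry for your loss."]:
    print(line, "->", detect_emotion(line), prosody_for(line))
```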

While traditional voice acting still offers a more nuanced touch, AI stands out for its consistency and scalability. Developers are working to narrow the gap by improving AI's emotional intelligence, aiming for even more natural-sounding results.

Real-time emotional adaptation is another exciting development. AI systems are now learning to adjust emotional tones dynamically, enhancing experiences in areas like interactive content, virtual assistants, and accessibility tools.
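For real-time emotional adaptation, one plausible approach (an assumption, not something the article specifies) is to smooth the target emotional intensity over time so the voice shifts gradually instead of jumping between moods. The sketch below applies an exponential moving average, where alpha controls how quickly the voice follows a new target; the intensity scale and values are hypothetical:

```python
def smooth(targets: list[float], alpha: float = 0.3, start: float = 0.0) -> list[float]:
    """Exponential moving average: each step moves `alpha` of the way toward the new target."""
    value, out = start, []
    for target in targets:
        value += alpha * (target - value)
        out.append(round(value, 3))  # rounding is for display only; state stays unrounded
    return out


# Hypothetical per-sentence emotional intensity targets (0 = calm, 1 = excited),
# e.g. produced by an emotion classifier as a conversation unfolds.
print(smooth([0.0, 1.0, 1.0, 1.0, 0.0]))
```

A small alpha gives calmer, steadier transitions; an alpha of 1.0 tracks each new target immediately.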

For creators looking to use emotional voice modulation, there are a few key points to keep in mind:

- Brand Alignment: Choose voice styles and emotional ranges that reflect your brand's identity.

- Ethical Transparency: Clearly communicate when content includes AI-generated voices.

- Context Matters: Ensure the emotional tone matches the content's purpose and audience expectations.

This technology also has enormous potential in accessibility. For example, when paired with brain-computer interfaces, it could provide expressive voices for individuals with speech impairments.

As emotional voice modulation continues to advance, it’s becoming an essential tool for creating content that truly resonates with audiences across various platforms.

Conclusion

AI voice technology is making a big impact in 2025. With advancements in Natural Language Processing, multilingual support, and emotional voice modulation, it's offering new opportunities for both creators and businesses.

This year, the focus is on developing conversational, human-like voices that connect better with audiences. From global communication to immersive media, these trends are reshaping how content is made. Tools like StoryShort AI show how creators can produce engaging content quickly while keeping quality intact.

Developers are also pushing the boundaries of voice synthesis by capturing the subtleties of human speech, like tone, stress, and micro-expressions [4]. These improvements are helping creators craft content that feels more relatable and meaningful to a diverse range of audiences.

Beyond technical progress, AI voice technology is changing how we think about content creation and audience interaction. It’s paving the way for new forms of creative expression and easier global communication.

Creators who stay updated and find the right balance between innovation and staying genuine will thrive. By using these tools, they can explore fresh ways to connect and create in the fast-changing digital world.

FAQs

What do YouTubers use for text-to-speech?

In 2025, many creators rely on advanced text-to-speech tools to streamline video production. Some of the most widely used tools include:

| Text-to-Speech Tool | Key Features |
|---|---|
| Murf AI | Offers voice customization and a variety of accents |
| ElevenLabs | Delivers high-quality voice synthesis with emotional depth |
| Google TTS | Known for reliability and broad language support |
| Speechify | Provides natural-sounding voices with simple integration |
| NaturalReader | Features an easy-to-use interface and diverse voice options |

Tools like Murf AI, ElevenLabs, and StoryShort AI have become essential for creators. They provide options such as personalized voices, emotional expression, and seamless integration, making it easier to create engaging and professional content. StoryShort AI even combines text-to-speech with video editing tools, simplifying workflows for faster, polished results.

These advancements in AI voice technology are transforming content creation by offering features like emotional modulation, support for multiple languages, and real-time voice synthesis [2] [3] [4].